Deep learning has changed the way machines process information, but not all models work the same way. Two of the field’s most influential architectures, Transformers and Convolutional Neural Networks (CNNs), approach problems differently. CNNs, loosely inspired by biological vision, have long dominated image recognition, while Transformers, built for language processing, have reshaped how models capture context.

But their influence is expanding beyond their original domains, sparking debate over which model is superior. The answer isn’t straightforward. Understanding their differences matters to practitioners as much as to researchers, because it determines which architecture suits a given problem. Let’s break down what sets them apart and where each one shines.

Understanding the Core Architecture

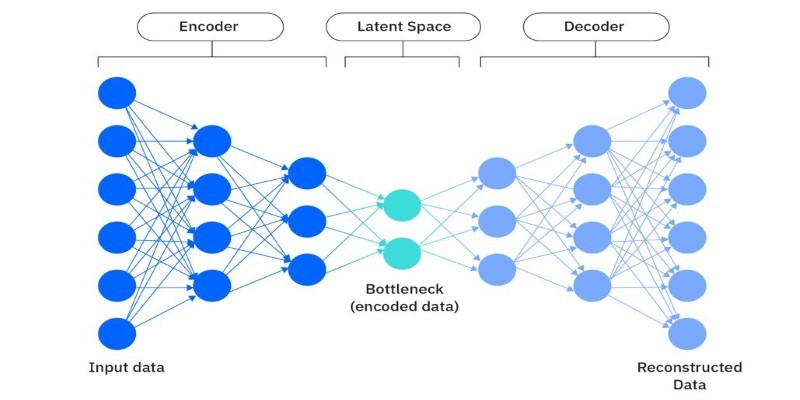

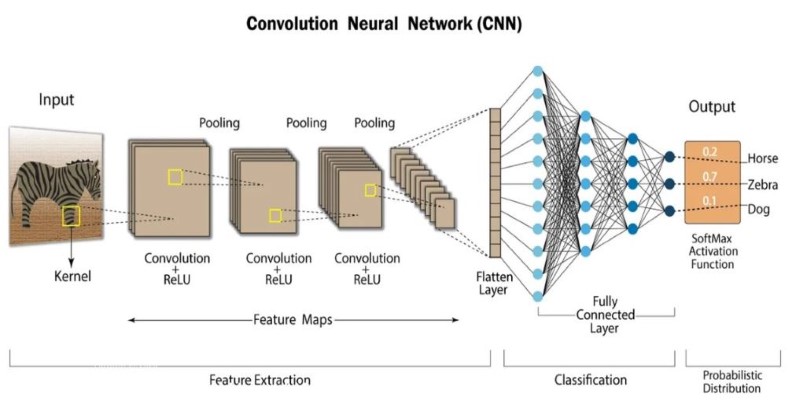

CNNs have been at the forefront of computer vision for years, loosely inspired by how the visual cortex processes information. Convolutional layers slide small learned filters across an image to extract features hierarchically: early layers pick up edges and textures, and deeper layers combine them into shapes and object parts. Pooling layers downsample the resulting feature maps, reducing computation while preserving the strongest responses. The final fully connected layers map the extracted features to class predictions. This design makes CNNs very effective for spatially aware tasks such as medical imaging and face recognition.
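To make the convolution and pooling steps concrete, here is a minimal sketch in plain Python rather than a deep learning framework. The function names `conv2d` and `max_pool2d`, the toy image, and the hand-picked edge kernel are all assumptions for illustration; a real CNN learns its kernels during training and applies many of them per layer.

```python
def conv2d(image, kernel):
    """Stride-1, no-padding 2D cross-correlation (what CNN layers call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 5x5 image: dark columns (0) on the left, bright columns (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked kernel that responds to a dark-to-bright vertical edge (not learned).
edge_kernel = [[-1, 1],
               [-1, 1]]

features = conv2d(image, edge_kernel)   # 4x4 map; every row is [0, 2, 0, 0]
pooled = max_pool2d(features, size=2)   # 2x2 map: [[2, 0], [2, 0]]
```

The feature map is nonzero only where the edge sits, and pooling halves each dimension while keeping that response, which is the "reduce dimensionality while preserving key features" behavior described above.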

Transformers, on the other hand, were originally designed for sequential data but have turned out to be highly adaptable. Their central innovation is the self-attention mechanism, which lets the model weigh how relevant every element of a sequence is to every other element. In contrast to CNNs, which build up context through local spatial hierarchies, Transformers relate all positions of the input to one another within a single layer, which makes them very effective at capturing long-range dependencies. This capability is especially useful in language processing, where context is an important factor. Unlike older recurrent networks, which process tokens one at a time, Transformers process entire sequences in parallel, which dramatically shortens training time. Their scalability has helped them surpass older models in tasks ranging from machine translation to text generation. Although Transformers were originally developed for natural language processing, the architecture has since been applied to domains such as protein structure prediction and even image recognition via Vision Transformers (ViTs).
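The self-attention idea can be sketched in a few lines of plain Python. This is a simplified, single-head version under stated assumptions: the helper names `softmax` and `self_attention` are illustrative, and the toy tokens are used directly as queries, keys, and values, whereas a real Transformer first passes the input through separate learned projections.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: every query attends to every key."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to each key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)  # attention weights for this position, summing to 1
        weights.append(w)
        # Output is the weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights


# Three toy token vectors (assumed values, standing in for embeddings).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` shows how much one token draws on every other token, regardless of how far apart they are in the sequence. The loop over queries is written out for readability; because no step depends on the result for a previous position, frameworks compute all positions at once as matrix products.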

Strengths and Limitations

CNNs are excellent at recognizing visual patterns, making them indispensable for image classification, object detection, and facial recognition. Their ability to break down images into smaller patterns and process them hierarchically enables precise and efficient classification. CNNs are also computationally efficient on grid-structured data such as images, because each filter’s weights are shared across every position, making them well suited to applications requiring real-time processing, such as self-driving cars and surveillance systems. However, CNNs struggle with long-range and sequential relationships. Each filter sees only a small, fixed-size neighborhood, so relating distant parts of the input requires stacking many layers, which limits their effectiveness in tasks like language modeling.
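The long-range limitation can be quantified with the receptive field: how many input positions along one axis can influence a single output value. The sketch below uses the standard recurrence for stacked convolution and pooling layers; the function name `receptive_field` and the example layer stacks are assumptions for illustration.

```python
def receptive_field(layers):
    """Receptive field along one axis for a stack of conv/pool layers.

    layers: list of (kernel_size, stride) pairs, ordered from input to output.
    """
    rf = 1     # a single input position sees only itself
    jump = 1   # distance in input positions between neighboring outputs so far
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf


# Five stacked 3-wide, stride-1 convolutions see only 11 input positions.
five_convs = receptive_field([(3, 1)] * 5)                  # 11

# A stride-2 pooling layer in between widens the view of later layers.
with_pooling = receptive_field([(3, 1), (2, 2), (3, 1)])    # 8
```

With small stride-1 filters the receptive field grows by only a couple of positions per layer, which is why connecting elements hundreds of positions apart takes either a very deep stack or aggressive downsampling.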

Transformers, on the other hand, excel in tasks requiring context awareness. Their self-attention mechanism allows them to model relationships between all the words in a sentence, which has revolutionized natural language processing. They have also begun to challenge CNNs in image recognition, with Vision Transformers outperforming traditional models in some cases. However, their biggest drawback is computational cost. Standard self-attention compares every element with every other, so its compute and memory grow quadratically with sequence length, and training large-scale Transformer models requires vast amounts of data and processing power. Additionally, their decision-making process is often difficult to interpret, which poses challenges in applications where transparency is crucial. Despite these limitations, Transformers have expanded the capabilities of AI, opening new possibilities beyond text processing.
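A rough way to see where the cost comes from is to count pairwise interactions. The sketch below is a back-of-the-envelope comparison, not a benchmark: the function names are hypothetical, and the counts cover only the attention score matrix for one head in one layer, and one 1D convolution filter, ignoring projections, channels, and everything else in a real model.

```python
def attention_score_entries(seq_len):
    """Entries in one attention score matrix: every position paired with every position."""
    return seq_len * seq_len


def conv_interactions(seq_len, kernel_size):
    """Position pairs touched by one 1D convolution filter (padding ignored)."""
    return seq_len * kernel_size


for n in (128, 1024, 8192):
    print(n, attention_score_entries(n), conv_interactions(n, kernel_size=3))
```

Doubling the sequence length quadruples the attention count but only doubles the convolution count, which is one reason long inputs are expensive for standard Transformers.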

Real-World Applications

CNNs remain widely deployed across computer vision, with applications in healthcare, security, and autonomous systems. In medical imaging, they help detect abnormalities in X-rays and MRIs. Self-driving cars rely on CNNs for object detection and scene understanding, both of which are essential for safe navigation. Facial recognition systems, artistic style transfer, and some fraud detection tools also rely on CNN-based architectures. Despite growing competition from Transformers, CNNs remain a preferred choice for visual processing tasks that require both efficiency and high accuracy.

Transformers, meanwhile, have transformed natural language processing. They power advanced chatbots, real-time language translation tools, and AI-generated content. Models like GPT have changed content creation by producing human-like text with remarkable coherence. Beyond language, Transformers are making an impact in areas like drug discovery and financial forecasting, where their ability to find patterns across vast datasets is applied to tasks such as predicting market trends and optimizing logistics. Vision Transformers are also challenging CNN dominance in image recognition, with some models achieving state-of-the-art performance in classification tasks. As research continues, the role of Transformers in AI is expected to expand further.

Evolving Trends in AI: The Future of Transformers and CNNs

Deep learning is advancing rapidly, with CNNs and Transformers evolving to meet new challenges. Researchers are developing hybrid models that combine convolutional feature extraction with Transformer attention, aiming to improve both accuracy and efficiency in image recognition. Vision Transformers (ViTs) already compete with CNNs in computer vision, suggesting that CNNs are no longer the automatic choice for every vision task. Meanwhile, improvements in hardware, such as AI accelerators, are helping offset the high computational demands of Transformers, making them more accessible.
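One place where the two families meet is the step that turns an image into a sequence. Vision Transformers split an image into fixed-size patches and treat each flattened patch as a token. The sketch below shows only that splitting step, under stated assumptions: `patchify` is an illustrative name, the image is a single-channel list of lists, and the learned projection and position embeddings that a real ViT applies afterward are omitted.

```python
def patchify(image, patch_size):
    """Split a 2D image into non-overlapping patches, each flattened into one token."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    tokens = []
    for top in range(0, height, patch_size):          # patches in row-major order
        for left in range(0, width, patch_size):
            tokens.append([
                image[top + di][left + dj]
                for di in range(patch_size)
                for dj in range(patch_size)
            ])
    return tokens


# Toy 4x4 image whose pixel values are 0..15 in row-major order.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
tokens = patchify(image, patch_size=2)   # 4 tokens of length 4; first is [0, 1, 4, 5]
```

Splitting into patches is equivalent to a convolution whose stride equals its kernel size, which hints at why hybrid designs can swap this step for a small convolutional stem.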

CNNs remain indispensable for tasks requiring speed and spatial awareness, while Transformers continue to redefine NLP and sequential data processing. As AI applications expand, both architectures will likely coexist, each used where it performs best. Future systems are likely to integrate the two more tightly, drawing on the strengths of each to improve accuracy and efficiency, and that ongoing evolution should keep the AI landscape dynamic and competitive.

Conclusion

Both Transformers and Convolutional Neural Networks are revolutionary in their own right, each excelling in different domains. CNNs remain a strong default for image-related tasks, leveraging their hierarchical structure to extract features efficiently. Meanwhile, Transformers have changed the landscape of NLP and are now expanding into new areas, offering exceptional scalability and flexibility. Choosing between the two depends on the problem at hand: CNNs for grid-structured image data, and Transformers for complex, long-range dependencies in text and beyond. As AI advances, the interplay between these models will likely shape the future of deep learning.