Are vector embeddings just some fancy AI jargon? Think again! An embedding is simply a list of numbers that represents a piece of content (a sentence, a song, a photo) so that similar things end up with similar numbers. These powerful little data transformers are “quietly” revolutionizing how we interact with tech (quietly because, let’s be honest, 99% of the world doesn’t know what they are), often in ways you’d never expect. They’re behind everything from Netflix’s ‘eerily’ accurate recommendations to semantic search. In this post, we’re diving into 7 real-world uses of vector embeddings that’ll make you say, ‘Wait, that’s how that works?!’ Spoiler: You’ve probably used them today without even realizing it. Let’s get into it!

1. Search That "Actually Gets You" (Semantic Search)

You know how you type something into Google (or your internal work tool) and it just... knows what you meant—even if the words weren’t exact? That’s vector embeddings doing the heavy lifting.

Instead of searching for “just the words,” semantic search uses embeddings to find results based on meaning. So even if you search “cheap flights to LA,” it can match content that says “budget airfare to Los Angeles.” (Different words, same vibe.)

This shows up everywhere now—from shopping sites to company wikis to help docs. The search engine doesn’t need a perfect match... it needs to "understand" what you're getting at. Vector embeddings are how it does that.
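Want to see the “same vibe” idea in numbers? Here’s a minimal sketch, not how any particular search engine does it. The three-number vectors are made up for illustration (real embeddings come from a model and have hundreds of dimensions), and the ranking uses cosine similarity, one common way to measure how close two vectors are.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-written "embeddings" used only for this example.
documents = {
    "budget airfare to Los Angeles": [0.9, 0.8, 0.1],
    "luxury hotels in Paris": [0.1, 0.3, 0.9],
    "how to bake sourdough": [0.1, 0.0, 0.3],
}

# Pretend this is the embedding of the query "cheap flights to LA".
query_vector = [0.85, 0.75, 0.15]

# Rank documents from most to least similar to the query.
ranked = sorted(
    documents,
    key=lambda title: cosine_similarity(query_vector, documents[title]),
    reverse=True,
)
print(ranked[0])
```

The query and the top result share no keywords at all. They only match because their vectors point in nearly the same direction.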

And hey, if you’ve ever used a search tool that felt psychic? Now you know the trick. It’s vectors.

2. Content Recommendation

Ever wonder how apps like Spotify know what song to play next? Or how YouTube queues up another video you actually want to watch?

That’s vector embeddings working behind the scenes once again.

Basically, each song, video, or article gets turned into a kind of digital fingerprint—a vector. And your behavior (what you’ve clicked, liked, skipped, etc.) gets turned into a vector too. The system compares your “taste vector” with content vectors to find the closest matches. Boom, recommendations that make you go: “...Yeah okay, that’s a good one.”
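Here’s a rough sketch of that “taste vector” idea. It assumes the simplest possible approach: average the vectors of things you liked, then pick the unseen item closest to that average. The catalog, the vectors, and the `mean_vector` helper are all invented for this example. Real recommenders are far more involved.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def mean_vector(vectors):
    """Element-wise average of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

# Hypothetical content vectors (made up, not from a real model).
catalog = {
    "lo-fi beats": [0.9, 0.1, 0.2],
    "ambient piano": [0.8, 0.2, 0.1],
    "death metal": [0.1, 0.9, 0.3],
    "chill synthwave": [0.85, 0.15, 0.3],
}
liked = ["lo-fi beats", "ambient piano"]

# The "taste vector" here is just the average of the liked items.
taste_vector = mean_vector([catalog[title] for title in liked])

# Recommend the closest item the user has not already liked.
candidates = [title for title in catalog if title not in liked]
recommendation = max(
    candidates,
    key=lambda title: cosine_similarity(taste_vector, catalog[title]),
)
print(recommendation)
```

Averaging is a deliberate simplification. It ignores skips, recency, and everything else a production system would weigh, but it shows the core comparison.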

This isn’t just for streaming though. Even product recommendations (think Amazon) or “people you may know” on LinkedIn... all rely on this kind of similarity math.

So yeah, vector embeddings might be more in tune with your vibe than your friends sometimes.

3. Chatbots (Contextual Understanding)

Okay, let’s talk about those chatbots. Not the annoying kind from back in the day. We’re talking the newer ones.

Modern bots use vector embeddings to understand your question in context, match it to relevant knowledge, and respond appropriately (most of the time, anyway). They don’t just react to keywords. They try to “get” what you mean.

So when you ask your bank’s chatbot, “How do I change my debit card PIN?” even if you phrase it as “update my card code thingy,” it can still find and return the right information.

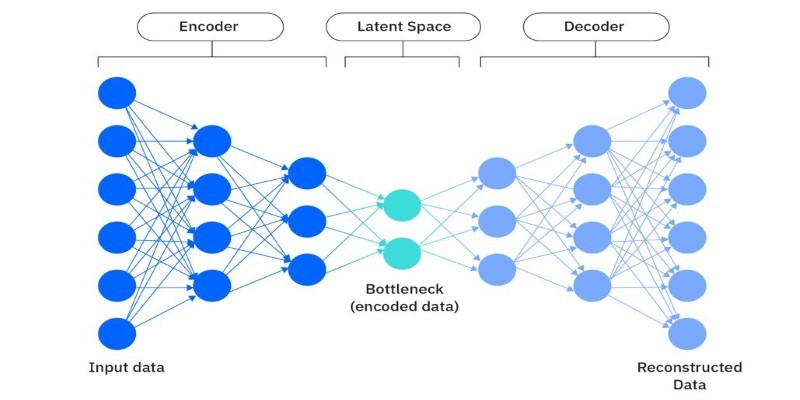

Behind the curtain? Embeddings turn your messy, human sentence into a clean, math-y vector. The bot then looks for “close-enough” vectors among the questions and documents it already has on file (its knowledge base) and uses the best matches to respond. That’s how it stays relevant.
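To make that lookup a bit more concrete, here’s a small sketch under some loud assumptions: the stored questions, their vectors, the reply text, and the `min_similarity` cutoff are all placeholders invented for illustration. The one new idea is the fallback: if nothing is close enough, the bot admits it instead of guessing.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

FALLBACK = "Sorry, I'm not sure. Let me connect you to a person."

# Hypothetical knowledge base: (stored question, made-up vector, placeholder reply).
FAQ = [
    ("How do I change my debit card PIN?", [0.9, 0.1, 0.1], "PIN help article"),
    ("How do I close my account?", [0.1, 0.9, 0.2], "Account closure article"),
]

def answer(question_vector, min_similarity=0.8):
    """Return the reply for the closest stored question, or a fallback."""
    best_score, best_reply = max(
        (cosine_similarity(question_vector, vector), reply)
        for _question, vector, reply in FAQ
    )
    if best_score < min_similarity:
        return FALLBACK
    return best_reply

# Pretend embedding of "update my card code thingy".
print(answer([0.8, 0.2, 0.15]))
# Pretend embedding of something unrelated to either stored question.
print(answer([0.1, 0.1, 0.9]))
```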

4. Finding Duplicates (Even If They’re Not Exactly the Same)

Ever had a ton of documents or photos that are kind of the same, but not quite? Vector embeddings make deduplication smarter.

Let’s say you’re managing a big collection of product descriptions. Some are rewritten versions of each other, some are almost copy-pasted, and some just reworded slightly over time.

Instead of manually comparing them (ugh), systems can turn each doc into a vector and compare how close they are in meaning. If two vectors are super close? They’re probably duplicates (or close enough to be flagged).
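As a sketch of what “super close” could mean in practice: compare every pair of vectors and flag the ones above a similarity cutoff. The SKU names, the vectors, and the 0.98 threshold are assumptions made up for this example. A sensible threshold depends on your data and your embedding model.

```python
import math
from itertools import combinations

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical product-description vectors (invented for illustration).
descriptions = {
    "sku-1": [0.90, 0.10, 0.30],
    "sku-2": [0.88, 0.12, 0.31],  # imagine a lightly reworded copy of sku-1
    "sku-3": [0.10, 0.80, 0.40],
}

def find_near_duplicates(vectors, threshold=0.98):
    """Return every pair of ids whose vectors are at least `threshold` similar."""
    pairs = []
    for (id_a, vec_a), (id_b, vec_b) in combinations(vectors.items(), 2):
        if cosine_similarity(vec_a, vec_b) >= threshold:
            pairs.append((id_a, id_b))
    return pairs

print(find_near_duplicates(descriptions))
```

Checking every pair is fine for a few thousand items but gets slow fast. Bigger collections usually lean on an approximate nearest-neighbor index instead.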

This is clutch for cleaning up messy databases, merging files, or even auditing things like support tickets or policy docs.

No more reading every line like it’s 2003 and you’re manually proofreading a Word doc. Let vectors help.

5. Security and Fraud Detection

Weird one, but powerful—vector embeddings are getting used in cybersecurity now. (Yeah, really.)

Here’s the deal: suspicious behavior usually follows a pattern. And instead of listing every rule manually, systems are starting to use embeddings to spot similarities in behavior, even when it’s not a perfect repeat.

For example, someone logs in from five places in one hour... and tries odd things with passwords. Embedding models can map that user’s behavior as a vector and compare it with known attack patterns. If it’s “too close,” the system raises a flag.

It’s not bulletproof, but it helps fill in the gaps where traditional rules miss stuff.

Same with fraud, like unusual purchases or account changes. If the vector “feels” off compared to past activity, it might trigger extra verification. Not magic. Just math.
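Here’s one way the “feels off compared to past activity” check could look. This is a deliberately simplified sketch: it uses three hand-picked numbers per session rather than a learned embedding, and the feature names, sample sessions, and `max_distance` cutoff are all hypothetical.

```python
import math

def mean_vector(vectors):
    """Element-wise average of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical features per session:
# [logins per hour, distinct locations, failed password attempts]
past_sessions = [
    [1.0, 1.0, 0.0],
    [2.0, 1.0, 1.0],
    [1.0, 1.0, 0.0],
]

# "Typical" behavior is the average of the user's past sessions.
typical = mean_vector(past_sessions)

def looks_unusual(session, max_distance=3.0):
    """Flag a session that sits far from the user's typical behavior."""
    return euclidean_distance(session, typical) > max_distance

print(looks_unusual([6.0, 5.0, 8.0]))  # five locations, lots of failed attempts
print(looks_unusual([1.0, 1.0, 1.0]))  # an ordinary-looking session
```

A flag like this would trigger extra verification rather than block anyone outright, since an unusual session is not always a malicious one.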

6. Image Search That Understands What’s In the Image

Ever used Google Lens or Pinterest’s “visual search” tool? (You point your phone at a chair and boom—similar products pop up.) That’s image embedding in action.

Instead of matching image files based on metadata (like names or tags), systems now analyze the actual content of the image and turn it into—yep—a vector. This lets them compare photos by what’s in them, not what they’re called.

It’s how your phone groups faces. How shopping sites show “visually similar” products. And even how museums digitize and match paintings (super cool use case, btw).

You don’t need a perfect match anymore. You just need a vibe match. Vector embeddings make it happen.

7. Multilingual Uses (Breaking Down Language Barriers in Apps)

This one’s a sleeper hit—cross-lingual embeddings.

Let’s say you’re building an app that has to deal with multiple languages (English, Spanish, Japanese, Urdu—you name it). Instead of writing different rules for each language, embedding models can map different languages to a shared meaning space.

So “apple” in English, “manzana” in Spanish, and “りんご” in Japanese can all end up close together in vector space. Which means the system understands they’re the same thing—even if it’s never seen them side-by-side.

Why does that matter?

Because it helps power real-time translation, smarter cross-language search, and apps that feel way more seamless across borders. (And let’s be honest... language tools used to be rough. This stuff is fixing that.)

This also helps global customer support teams, content moderation, and even search engines that work across languages.

Final Thoughts: So... Why Should You Care?

We get it. “Vector embeddings” doesn’t sound exciting. But that’s kind of the point. They're behind-the-scenes, doing quiet heavy lifting—making your apps smarter, your searches better, and your tools feel way more human than they used to.

Whether you're a techie or just someone who appreciates when things “just work”... embeddings are part of the reason they do.

So next time YouTube recommends a perfect video, or your company search bar seems to read your mind, you’ll know what’s going on.