Artificial intelligence has revolutionized how machines process data, but not all data is static. Some information, such as speech, text, or price histories, arrives as a sequence in which order matters, and it calls for specialized handling. Recurrent Neural Networks (RNNs) are designed for such sequential tasks: they remember past inputs and use them to inform each new decision. This makes them well suited to applications like speech recognition, language translation, and stock market prediction.

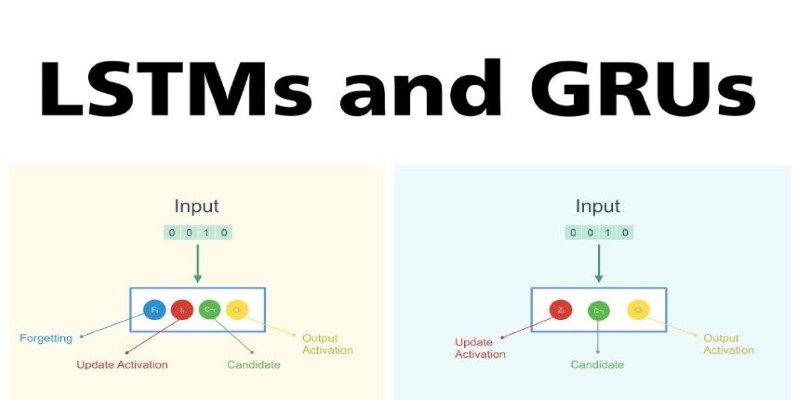

By preserving context, RNNs excel at natural language processing and predictive modeling. However, they struggle with long-term dependencies, which is why newer architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) were developed. Understanding RNNs is essential for keeping up with contemporary AI and deep learning.

What Is a Recurrent Neural Network?

A Recurrent Neural Network (RNN) is an artificial neural network designed to process sequential data. Unlike standard feedforward networks, which process each input independently, RNNs maintain a memory that links past and present inputs. This makes them well suited to time-dependent tasks in which the order of information matters.

At its core, an RNN contains a feedback loop that allows information to persist. At each time step, the network receives not only the current external input but also its own previous hidden state, and this creates a form of memory. As a result, RNNs can recognize patterns that unfold across a sequence. A simple analogy is reading a book: each word is understood not in isolation but in relation to the words that came before it.

This recurrent structure enables RNNs to handle tasks like speech-to-text transcription, where interpreting a word depends on its surrounding context. In financial prediction, RNNs can analyze past market trends to forecast future stock prices. Effective as they are, conventional RNNs struggle with long sequences because of the vanishing gradient problem, which makes long-range relationships hard to learn.

How Recurrent Neural Networks Work

An RNN resembles a regular feedforward neural network with one significant difference: a feedback loop. Instead of processing each data point independently, an RNN stores past information in a hidden state and uses it in subsequent computations.
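One common way to write this down, for a simple (Elman-style) RNN, is shown below. The symbols are standard notation assumed here rather than defined elsewhere in this article: x_t is the input at step t, h_t the hidden state, y_t the output, and the W and b terms are learned weights and biases.

```latex
\begin{aligned}
h_t &= \tanh\!\left(W_{xh}\, x_t + W_{hh}\, h_{t-1} + b_h\right) \\
y_t &= W_{hy}\, h_t + b_y
\end{aligned}
```

The W_{hh} h_{t-1} term is the feedback loop: it is the only place where the past enters the computation. The same weights are reused at every time step, which is what lets a single network handle sequences of any length.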

Here's how the process unfolds:

- Input Processing: The network takes an input, such as a word in a sentence or a frame in a video.

- Hidden State Update: The network updates its memory by combining the current input with the previous hidden state.

- Output Generation: The RNN produces an output from the updated hidden state.

- Looping Mechanism: The updated hidden state is carried forward and combined with the next input.
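The four steps above can be sketched in a few lines of plain Python. This is a minimal, untrained illustration assuming a single tanh hidden layer and a linear output; the function `rnn_forward`, the weight names, and the random initialization are hypothetical choices for the example, not a specific library's API.

```python
import math
import random

def rnn_forward(inputs, W_xh, W_hh, b_h, W_hy, b_y, h0):
    """Run a simple (Elman-style) RNN over a sequence of input vectors.

    Returns the per-step outputs and the final hidden state.
    """
    h = h0
    outputs = []
    for x in inputs:  # 1. Input processing: one element of the sequence per step
        # 2. Hidden state update: mix the current input with the previous hidden state.
        #    The comprehension reads the old h before the new list is assigned.
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][k] * h[k] for k in range(len(h)))
                + b_h[i]
            )
            for i in range(len(b_h))
        ]
        # 3. Output generation: a linear read-out of the new hidden state.
        y = [sum(W_hy[o][i] * h[i] for i in range(len(h))) + b_y[o] for o in range(len(b_y))]
        outputs.append(y)
        # 4. Looping: the for-loop carries h forward to the next input.
    return outputs, h

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
input_size, hidden_size, output_size = 3, 4, 2
W_xh = rand_matrix(hidden_size, input_size)
W_hh = rand_matrix(hidden_size, hidden_size)
W_hy = rand_matrix(output_size, hidden_size)
b_h, b_y, h0 = [0.0] * hidden_size, [0.0] * output_size, [0.0] * hidden_size

sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
outputs, h_final = rnn_forward(sequence, W_xh, W_hh, b_h, W_hy, b_y, h0)
_, h_reversed = rnn_forward(sequence[::-1], W_xh, W_hh, b_h, W_hy, b_y, h0)
print(len(outputs), h_final != h_reversed)
```

Feeding the same three inputs in reverse order yields a different final hidden state, which is the order sensitivity described earlier. In practice one would normally use a framework layer (for example PyTorch's `nn.RNN`) and learn the weights with backpropagation through time.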

This structure allows RNNs to process sequential data, but it also presents challenges. The key issue is the vanishing gradient problem: during training, the gradient signal that links later outputs to earlier steps shrinks as it is propagated backward through many time steps. This makes it difficult for RNNs to learn long-term dependencies.
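A toy calculation makes the vanishing gradient concrete. Assume a hypothetical one-unit RNN, h_t = tanh(w * h_{t-1} + x_t). By the chain rule, the sensitivity of the final state to the initial one is a product of per-step factors w * (1 - h_t^2), and when every factor is smaller than 1 in magnitude the product shrinks geometrically with sequence length. The weight 0.9 and the constant input 0.5 are arbitrary values chosen for illustration.

```python
import math

def state_sensitivity(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the toy scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh(...)**2) = w * (1 - h_t**2)
        grad *= w * (1.0 - h * h)
    return grad

results = {steps: state_sensitivity(w=0.9, inputs=[0.5] * steps) for steps in (1, 10, 50)}
for steps, g in results.items():
    print(f"{steps:>2} steps: dh_T/dh_0 = {g:.2e}")
```

Because each factor here is below 0.9, the sensitivity after 50 steps is smaller than 0.9^50 (roughly 0.005), so the earliest input barely influences the weight updates.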

To counter this, researchers developed advanced architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU). These models introduce mechanisms to selectively retain or discard information, allowing them to handle longer sequences more effectively.
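As a rough illustration of selective retention, here is one step of a Gated Recurrent Unit reduced to a single scalar unit. It follows one common formulation with an update gate z and a reset gate r; papers and libraries differ on whether z or 1 - z multiplies the old state, and the parameter values below are hypothetical, hand-picked to show the two extremes rather than learned.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h_prev, p):
    """One step of a scalar GRU; p holds hypothetical scalar weights and biases."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    # Candidate state; the reset gate controls how much of the past it sees.
    h_candidate = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # z near 0 keeps the old state, z near 1 overwrites it with the candidate.
    return (1.0 - z) * h_prev + z * h_candidate

base = dict(w_z=0.0, u_z=0.0, w_r=1.0, u_r=1.0, b_r=0.0, w_h=1.0, u_h=1.0, b_h=0.0)
inputs = [1.0, -1.0, 0.5] * 10

def run(b_z, h=0.8):
    """Feed the whole input sequence through the GRU, starting from state h."""
    for x in inputs:
        h = gru_step(x, h, {**base, "b_z": b_z})
    return h

h_kept = run(b_z=-10.0)        # update gate almost closed: memory retained
h_overwritten = run(b_z=10.0)  # update gate almost open: memory replaced
print(round(h_kept, 3), round(h_overwritten, 3))
```

With the update gate nearly shut, the starting value of 0.8 survives 30 steps almost unchanged; with it wide open, the state simply tracks the most recent inputs. A trained GRU learns gate values between these extremes.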

Applications of Recurrent Neural Networks

Recurrent Neural Networks are widely used in fields where understanding sequences is crucial. Their ability to recognize patterns in time-dependent data has made them valuable across many industries.

Natural Language Processing (NLP): RNNs have been widely used for machine translation, sentiment analysis, and text generation, helping chatbots and voice assistants interpret input and generate relevant responses.

Speech Recognition: Voice assistants such as Siri and Google Assistant have relied on RNNs to transcribe spoken words into text. The network analyzes the sequence of sounds and recognizes words based on their context within a sentence.

Financial Forecasting: RNNs process historical data to identify patterns for stock market predictions, sales forecasting, and algorithmic trading, enabling investors to make informed decisions based on past trends and market behavior.
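Before an RNN can learn from historical data, the series is typically framed as input sequences paired with the value that follows them. Below is a minimal sketch of that windowing step, assuming a one-dimensional series and a fixed window length; the helper `make_windows` is hypothetical, and the prices are made-up numbers, not real market data.

```python
def make_windows(series, window):
    """Split a 1-D series into (input_sequence, next_value) training pairs."""
    return [
        (series[start:start + window], series[start + window])
        for start in range(len(series) - window)
    ]

prices = [101.0, 102.5, 101.8, 103.2, 104.0, 103.5]  # made-up illustrative values
pairs = make_windows(prices, window=3)
for seq, target in pairs:
    print(seq, "->", target)  # an RNN would read seq step by step and predict target
```

Each window is fed to the network one element at a time, and the hidden state after the last element is used to predict the target value.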

Healthcare and Medical Diagnosis: In healthcare, RNNs can help predict disease by analyzing patient histories and medical records, allowing doctors to identify early signs of conditions such as heart disease or diabetes.

Autonomous Vehicles: Self-driving cars can use RNNs to process sensor data over time, track how objects move, and support driving decisions in tasks such as lane detection and obstacle avoidance.

Limitations and Future of Recurrent Neural Networks

Despite their versatility, Recurrent Neural Networks are not without flaws. Their most significant challenge is handling long-term dependencies, because of the vanishing gradient problem. When a network is trained on long sequences, the signal from early steps can become too weak to influence what it learns about later ones.

To overcome this, researchers introduced LSTMs and GRUs, which use gates to preserve important information over longer sequences. However, those gates add extra weight matrices that are applied at every time step, so these models can be computationally expensive and require significant processing power.
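One way to see where the extra cost comes from is to count weights. Assuming each gate or candidate computation is one weight block acting on the concatenated input and previous hidden state, plus one bias vector, a GRU uses three such blocks and an LSTM four, versus one for a simple RNN. The layer sizes below are arbitrary, and some libraries, PyTorch among them, keep two bias vectors per block, so their reported counts are slightly higher.

```python
def recurrent_layer_params(input_size, hidden_size, num_blocks):
    """Weights + biases for num_blocks blocks of shape (hidden, input + hidden),
    plus one bias vector per block (a simplifying convention)."""
    return num_blocks * (hidden_size * (input_size + hidden_size) + hidden_size)

counts = {
    name: recurrent_layer_params(input_size=128, hidden_size=256, num_blocks=blocks)
    for name, blocks in (("simple RNN", 1), ("GRU", 3), ("LSTM", 4))
}
for name, n in counts.items():
    print(f"{name:>10}: {n:,} parameters")
```

With these sizes the counts come out to 98,560 for the simple RNN, 295,680 for the GRU, and 394,240 for the LSTM, and every one of those weights is used again at each time step of the sequence.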

An alternative to RNNs is the Transformer, which has revolutionized deep learning, particularly in NLP. Unlike RNNs, which must process a sequence one step at a time, Transformers process all positions of a sequence in parallel, which makes them much faster to train on modern hardware. This has led to breakthroughs like GPT (Generative Pre-trained Transformer), which powers advanced AI chatbots and text generation models.
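The difference in parallelism shows up in a stripped-down version of the self-attention operation used in Transformers. In this sketch, queries, keys, and values are simply the raw input vectors (real Transformers apply learned projections, multiple heads, and more), so treat it as an illustration of the data dependencies rather than a faithful implementation; `simple_self_attention` is a hypothetical helper. Unlike an RNN, where step t needs the hidden state from step t - 1, each output position here depends only on the inputs, so all positions could be computed at the same time.

```python
import math

def simple_self_attention(xs):
    """Scaled dot-product self-attention without learned projections (simplified)."""
    d = len(xs[0])

    def attend(q):
        # Similarity of this position's query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in xs]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted average of the value vectors.
        return [sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)]

    # No call to attend() uses another call's result, so these could run in parallel.
    return [attend(q) for q in xs]

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = simple_self_attention(tokens)
for vec in attended:
    print([round(v, 3) for v in vec])
```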

While RNNs are still valuable for many applications, their role in AI is evolving. As new architectures emerge, their use will likely shift to more specialized tasks where their sequential processing remains advantageous, particularly in time-series analysis, speech recognition, and certain predictive modeling scenarios.

Conclusion

Recurrent Neural Networks have significantly advanced artificial intelligence by enabling machines to process sequential data. Their ability to retain past information has made them important in applications like speech recognition, financial forecasting, and natural language processing. Challenges such as the vanishing gradient problem led to improved architectures like LSTMs and GRUs, while Transformers now dominate many AI tasks. Even so, RNNs remain relevant for specific applications where sequential learning is essential. As AI evolves, RNNs will likely continue to contribute to innovation, showing that memory-based neural networks still have a place in modern technology and data-driven decision-making.